Consider coordination in these four domains:

How do groups of humans make a decision?

How do parallel predictions in the mind decide what to do?

How do multiple biological cells act as one organism?

How do parts of AI systems work together?

All of these systems share a similar structure:

Decentralized information being integrated.

Parts forming a whole.

This is what I think alignment is: the cooperation of smaller parts to build bigger agents.

This unifies my two primary interests of the last two years: AI alignment and Human alignment. Because Human alignment ⇔ AI alignment ⇔ Alignment.

The goal? Unblock alignment on all scales.

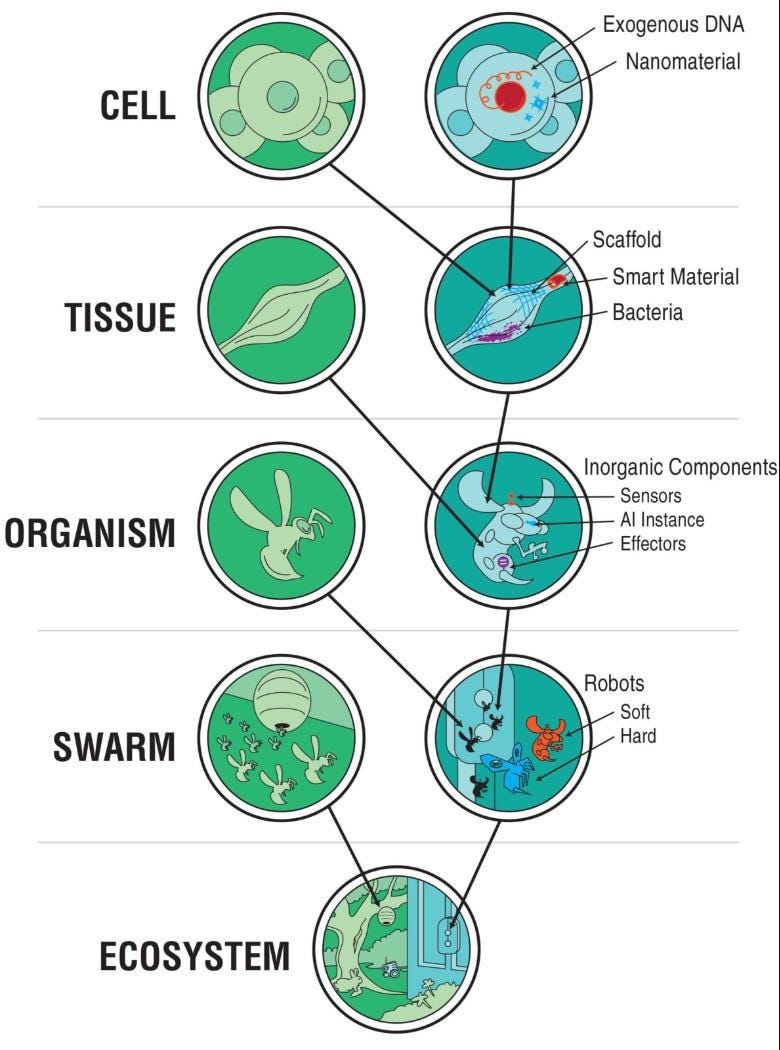

A recursive process of parts forming ever larger wholes:

How does it work?

Thanks to Alex Zhu, Adam Goldstein, Ivan Vendrov, Xiq, and Stag Lynn for conversations.

Terms discussed in this post: superagent, subagents, collective intelligence, hivemind, feeling like part of a greater whole, community feeling; boundaries, autonomy, causal distance; scale-free alignment, multi-scale alignment.

Further Reading

Hierarchical Agency: A Missing Piece in AI Alignment by Jan_Kulveit

Towards a scale-free theory of intelligent agency by Richard Ngo

Michael Levin, e.g. his Technological Approach to Mind Everywhere (TAME) framework

See all posts tagged “AI” on this blog, especially:

Alignment first, intelligence later

Now that Softmax, my favorite new AI company, is public, I can finally share this. They’ve funded my research and I’m very excited about what they’re doing!